
# Developing MCP Services of SSE Type

[MCP](https://www.claudemcp.com/) supports two transports: `STDIO` (standard input/output) and `SSE` (Server-Sent Events). Both format their messages as `JSON-RPC 2.0`. `STDIO` is used for local integrations, while `SSE` is used for communication over the network.

For example, if we want to use an MCP service directly from the command line, we can use the `STDIO` transport. If we want to use an MCP service from a web page, we can use the `SSE` transport.
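To make the message format concrete, here is a minimal sketch of the JSON-RPC 2.0 envelopes that either transport carries. The `tools/call` method and the `getProducts` tool name match what we build later in this article; the types and the helper names `makeRequest` and `isResponseFor` are illustrative and not part of any SDK.

```typescript
// Minimal JSON-RPC 2.0 envelope types (illustrative; real SDKs define richer ones).
interface JsonRpcRequest {
  jsonrpc: "2.0";
  id: number;
  method: string;
  params?: Record<string, unknown>;
}

interface JsonRpcResponse {
  jsonrpc: "2.0";
  id: number;
  result?: unknown;
  error?: { code: number; message: string };
}

let nextId = 1;

// Build a request; every request gets a fresh id so the reply can be matched to it.
function makeRequest(method: string, params?: Record<string, unknown>): JsonRpcRequest {
  return { jsonrpc: "2.0", id: nextId++, method, ...(params ? { params } : {}) };
}

// A response belongs to a request when the ids are equal.
function isResponseFor(req: JsonRpcRequest, res: JsonRpcResponse): boolean {
  return res.jsonrpc === "2.0" && res.id === req.id;
}

const call = makeRequest("tools/call", { name: "getProducts", arguments: {} });
console.log(JSON.stringify(call));
```

The same envelope is used whether the bytes travel over standard input/output or over HTTP with SSE; only the transport differs.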

Next, we will build an MCP-based shopping mall assistant, served as an SSE-type MCP service, with the following core features:

- Real-time access to product information and inventory levels, supporting custom orders.

- Recommending products based on customer preferences and available inventory.

- Real-time interaction with microservices using the MCP tool server.

- Checking real-time inventory levels when answering product inquiries.

- Facilitating product purchases using product ID and quantity.

- Real-time updating of inventory levels.

- Providing ad hoc analysis of order transactions through natural language queries.

> Here we use the Anthropic Claude 3.5 Sonnet model as the AI assistant for the MCP service, but other models that support tool invocation can also be chosen.

First, we need a product microservice that exposes an API for the product list. Then we provide an order microservice that exposes APIs for order creation, inventory information, and so on.

The next core component is the MCP SSE server, which exposes the product and order microservice data to the LLM as tools over the SSE transport.

Finally, we will use an MCP client to connect to the MCP SSE server over SSE and interact with the LLM.

## Microservices

Next, we will start developing the product microservice and order microservice, exposing API interfaces.

First, define the types for products, inventory, and orders.

```typescript
// types/index.ts
export interface Product {
  id: number;
  name: string;
  price: number;
  description: string;
}

export interface Inventory {
  productId: number;
  quantity: number;
  product?: Product;
}

export interface Order {
  id: number;
  customerName: string;
  items: Array<{ productId: number; quantity: number }>;
  totalAmount: number;
  orderDate: string;
}
```

We could expose the product and order microservices as HTTP APIs with Express. Because this demo uses mock data, we keep everything in memory and expose it through the functions below. (In a production environment, these would be real microservices backed by a database.)

```typescript
// services/product-service.ts
import { Product, Inventory, Order } from "../types/index.js";

// Mock data storage
let products: Product[] = [
  {
    id: 1,
    name: "Smart Watch Galaxy",
    price: 1299,
    description: "Health monitoring, sports tracking, supports various applications",
  },
  {
    id: 2,
    name: "Wireless Bluetooth Headphones Pro",
    price: 899,
    description: "Active noise cancellation, 30 hours battery life, IPX7 waterproof",
  },
  {
    id: 3,
    name: "Portable Power Bank",
    price: 299,
    description: "20000mAh large capacity, supports fast charging, lightweight design",
  },
  {
    id: 4,
    name: "Huawei MateBook X Pro",
    price: 1599,
    description: "14.2-inch full screen, 3:2 aspect ratio, 100% sRGB color gamut",
  },
];

// Mock inventory data
let inventory: Inventory[] = [
  { productId: 1, quantity: 100 },
  { productId: 2, quantity: 50 },
  { productId: 3, quantity: 200 },
  { productId: 4, quantity: 150 },
];

let orders: Order[] = [];

export async function getProducts(): Promise<Product[]> {
  return products;
}

export async function getInventory(): Promise<Inventory[]> {
  return inventory.map((item) => {
    const product = products.find((p) => p.id === item.productId);
    return {
      ...item,
      product,
    };
  });
}

export async function getOrders(): Promise<Order[]> {
  return [...orders].sort(
    (a, b) => new Date(b.orderDate).getTime() - new Date(a.orderDate).getTime()
  );
}

export async function createPurchase(
  customerName: string,
  items: { productId: number; quantity: number }[]
): Promise<Order> {
  if (!customerName || !items || items.length === 0) {
    throw new Error("Invalid request: missing customer name or product");
  }

  let totalAmount = 0;

  // Validate inventory and calculate total price
  for (const item of items) {
    const inventoryItem = inventory.find((i) => i.productId === item.productId);
    const product = products.find((p) => p.id === item.productId);

    if (!inventoryItem || !product) {
      throw new Error(`Product ID ${item.productId} does not exist`);
    }

    if (inventoryItem.quantity < item.quantity) {
      throw new Error(
        `Product ${product.name} is out of stock. Available: ${inventoryItem.quantity}`
      );
    }

    totalAmount += product.price * item.quantity;
  }

  // Create order
  const order: Order = {
    id: orders.length + 1,
    customerName,
    items,
    totalAmount,
    orderDate: new Date().toISOString(),
  };

  // Update inventory
  items.forEach((item) => {
    const inventoryItem = inventory.find(
      (i) => i.productId === item.productId
    )!;
    inventoryItem.quantity -= item.quantity;
  });

  orders.push(order);
  return order;
}
```
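One edge case is worth noting in `createPurchase`: the stock check looks at each line item separately, so an order that lists the same product twice could pass validation line by line and still oversell. Below is a minimal sketch of one way to guard against this, assuming we merge quantities per product before checking stock. `LineItem`, `mergeItems`, and `checkStock` are hypothetical names for illustration and are not part of the demo code.

```typescript
interface LineItem {
  productId: number;
  quantity: number;
}

// Merge duplicate product lines so each product is validated once against stock.
function mergeItems(items: LineItem[]): LineItem[] {
  const totals = new Map<number, number>();
  for (const { productId, quantity } of items) {
    if (!Number.isInteger(quantity) || quantity <= 0) {
      throw new Error(`Invalid quantity for product ${productId}: ${quantity}`);
    }
    totals.set(productId, (totals.get(productId) ?? 0) + quantity);
  }
  const merged: LineItem[] = [];
  totals.forEach((quantity, productId) => merged.push({ productId, quantity }));
  return merged;
}

// Throw if any merged line exceeds the available stock; stock is not modified.
function checkStock(stock: Map<number, number>, items: LineItem[]): void {
  for (const { productId, quantity } of mergeItems(items)) {
    const available = stock.get(productId);
    if (available === undefined) {
      throw new Error(`Product ID ${productId} does not exist`);
    }
    if (available < quantity) {
      throw new Error(`Product ID ${productId} is out of stock. Available: ${available}`);
    }
  }
}

const stock = new Map([[2, 50]]);
try {
  // Two lines of 30 each pass a per-line check against 50, but 60 in total does not.
  checkStock(stock, [
    { productId: 2, quantity: 30 },
    { productId: 2, quantity: 30 },
  ]);
} catch (error) {
  console.log((error as Error).message);
}
```

Because the check runs before any inventory is changed, a rejected order leaves the stock untouched.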

We can then expose these functions as MCP tools:

```typescript
// mcp-server.ts
import { McpServer } from "@modelcontextprotocol/sdk/server/mcp.js";
import { z } from "zod";
import {
  getProducts,
  getInventory,
  getOrders,
  createPurchase,
} from "./services/product-service.js";

export const server = new McpServer({
  name: "mcp-sse-demo",
  version: "1.0.0",
  description: "MCP tool for product queries, inventory management, and order processing",
});

// Tool to get product list
server.tool("getProducts", "Get all product information", {}, async () => {
  console.log("Fetching product list");
  const products = await getProducts();
  return {
    content: [
      {
        type: "text",
        text: JSON.stringify(products),
      },
    ],
  };
});

// Tool to get inventory information
server.tool("getInventory", "Get inventory information for all products", {}, async () => {
  console.log("Fetching inventory information");
  const inventory = await getInventory();
  return {
    content: [
      {
        type: "text",
        text: JSON.stringify(inventory),
      },
    ],
  };
});

// Tool to get order list
server.tool("getOrders", "Get all order information", {}, async () => {
  console.log("Fetching order list");
  const orders = await getOrders();
  return {
    content: [
      {
        type: "text",
        text: JSON.stringify(orders),
      },
    ],
  };
});

// Tool to purchase products
server.tool(
  "purchase",
  "Purchase products",
  {
    items: z
      .array(
        z.object({
          productId: z.number().int().describe("Product ID"),
          // Require a positive integer so a purchase can never add stock
          quantity: z.number().int().positive().describe("Purchase quantity"),
        })
      )
      .describe("List of products to purchase"),
    customerName: z.string().describe("Customer name"),
  },
  async ({ items, customerName }) => {
    console.log("Processing purchase request", { items, customerName });
    try {
      const order = await createPurchase(customerName, items);
      return {
        content: [
          {
            type: "text",
            text: JSON.stringify(order),
          },
        ],
      };
    } catch (error: any) {
      return {
        content: [
          {
            type: "text",
            text: JSON.stringify({ error: error.message }),
          },
        ],
      };
    }
  }
);
```

Here we have defined 4 tools:

- `getProducts`: Get all product information

- `getInventory`: Get inventory information for all products

- `getOrders`: Get all order information

- `purchase`: Purchase products
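The `purchase` tool reports failures as ordinary text content. The MCP tool result format also has an optional `isError` flag that lets a client distinguish a failed call from a successful one without parsing the text. A minimal sketch follows, assuming a local `ToolResult` type that mirrors the text-only result shape used in this article; `ok`, `fail`, and `toToolResult` are illustrative helper names, not SDK functions.

```typescript
// Local mirror of the tool result shape used in this article (text content only).
interface ToolResult {
  content: Array<{ type: "text"; text: string }>;
  isError?: boolean;
}

// Wrap a successful value as JSON text.
function ok(value: unknown): ToolResult {
  return { content: [{ type: "text", text: JSON.stringify(value) }] };
}

// Wrap a failure and flag it, so the caller does not have to parse the text.
function fail(error: unknown): ToolResult {
  const message = error instanceof Error ? error.message : String(error);
  return {
    content: [{ type: "text", text: JSON.stringify({ error: message }) }],
    isError: true,
  };
}

// Run any async handler and convert its outcome into a ToolResult.
async function toToolResult(run: () => Promise<unknown>): Promise<ToolResult> {
  try {
    return ok(await run());
  } catch (error) {
    return fail(error);
  }
}
```

With helpers like these, the body of the `purchase` handler could shrink to `return toToolResult(() => createPurchase(customerName, items));`.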

If this were a `STDIO`-type MCP service, a command-line client could use these tools directly. Because we want an `SSE`-type MCP service, we also need an MCP SSE server to expose them.

## MCP SSE Server

Next, we will develop the MCP SSE server, which exposes the product and order microservice data as tools over the SSE transport.

```typescript
// mcp-sse-server.ts
import express, { Request, Response, NextFunction } from "express";
import cors from "cors";
import { SSEServerTransport } from "@modelcontextprotocol/sdk/server/sse.js";
import { server as mcpServer } from "./mcp-server.js"; // Renamed to avoid naming conflicts

const app = express();
app.use(
  cors({
    origin: process.env.ALLOWED_ORIGINS?.split(",") || "*",
    methods: ["GET", "POST"],
    allowedHeaders: ["Content-Type", "Authorization"],
  })
);

// Store active connections
const connections = new Map();

// Health check endpoint
app.get("/health", (req, res) => {
  res.status(200).json({
    status: "ok",
    version: "1.0.0",
    uptime: process.uptime(),
    timestamp: new Date().toISOString(),
    connections: connections.size,
  });
});

// SSE connection establishment endpoint
app.get("/sse", async (req, res) => {
  // Instantiate SSE transport object
  const transport = new SSEServerTransport("/messages", res);
  // Get sessionId
  const sessionId = transport.sessionId;
  console.log(`[${new Date().toISOString()}] New SSE connection established: ${sessionId}`);

  // Register connection
  connections.set(sessionId, transport);

  // Handle connection interruption
  req.on("close", () => {
    console.log(`[${new Date().toISOString()}] SSE connection closed: ${sessionId}`);
    connections.delete(sessionId);
  });

  // Connect transport object to MCP server
  await mcpServer.connect(transport);
  console.log(`[${new Date().toISOString()}] MCP server connection successful: ${sessionId}`);
});

// Endpoint to receive client messages
app.post("/messages", async (req: Request, res: Response) => {
  try {
    console.log(`[${new Date().toISOString()}] Received client message:`, req.query);
    const sessionId = req.query.sessionId as string;

    // Find the corresponding SSE connection
    const transport = connections.get(sessionId) as SSEServerTransport | undefined;
    if (!transport) {
      throw new Error("No active SSE connection");
    }

    // Use transport to process the message
    await transport.handlePostMessage(req, res);
  } catch (error: any) {
    console.error(`[${new Date().toISOString()}] Failed to process client message:`, error);
    res.status(500).json({ error: "Failed to process message", message: error.message });
  }
});

// Gracefully close all connections
async function closeAllConnections() {
  console.log(
    `[${new Date().toISOString()}] Closing all connections (${connections.size} connections)`
  );
  for (const [id, transport] of connections.entries()) {
    try {
      // Send close event
      transport.res.write(
        'event: server_shutdown\ndata: {"reason": "Server is shutting down"}\n\n'
      );
      transport.res.end();
      console.log(`[${new Date().toISOString()}] Closed connection: ${id}`);
    } catch (error) {
      console.error(`[${new Date().toISOString()}] Failed to close connection: ${id}`, error);
    }
  }
  connections.clear();
}

// Error handling
app.use((err: Error, req: Request, res: Response, next: NextFunction) => {
  console.error(`[${new Date().toISOString()}] Unhandled exception:`, err);
  res.status(500).json({ error: "Internal server error" });
});

// Graceful shutdown
process.on("SIGTERM", async () => {
  console.log(`[${new Date().toISOString()}] Received SIGTERM signal, preparing to shut down`);
  await closeAllConnections();
  server.close(() => {
    console.log(`[${new Date().toISOString()}] Server has shut down`);
    process.exit(0);
  });
});

process.on("SIGINT", async () => {
  console.log(`[${new Date().toISOString()}] Received SIGINT signal, preparing to shut down`);
  await closeAllConnections();
  process.exit(0);
});

// Start server
const port = process.env.PORT || 8083;
const server = app.listen(port, () => {
  console.log(
    `[${new Date().toISOString()}] Intelligent Shopping Mall MCP SSE Server started at: http://localhost:${port}`
  );
  console.log(`- SSE connection endpoint: http://localhost:${port}/sse`);
  console.log(`- Message handling endpoint: http://localhost:${port}/messages`);
  console.log(`- Health check endpoint: http://localhost:${port}/health`);
});
```

Here we use Express to expose an SSE connection endpoint, `/sse`, over which the server streams messages to the client. We create the transport object with `SSEServerTransport` and tell it that clients should POST their messages to the `/messages` endpoint.

```typescript
const transport = new SSEServerTransport("/messages", res);
```

After creating the transport object, we can connect it to the MCP server as follows:

```typescript
// Connect transport object to MCP server
await mcpServer.connect(transport);
```

With this in place, the client opens a long-lived stream on `/sse` to receive messages from the server, and POSTs its own messages to `/messages`. When a client message arrives at the `/messages` endpoint, we look up the `transport` object for that session and let it handle the message:

```typescript
// Use transport to process the message
await transport.handlePostMessage(req, res);
```

These messages carry the operations we usually talk about, such as listing tools and invoking tools.
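On the wire, an SSE stream is plain text: each event is a block of `field: value` lines terminated by a blank line. The sketch below formats and parses such frames. The `endpoint` and `message` event names reflect our understanding of how the SDK's SSE transport announces the POST URL and delivers JSON-RPC messages; treat them, the sample session ID, and the helper names `formatEvent` and `parseEvents` as assumptions made for illustration.

```typescript
interface SseEvent {
  event: string;
  data: string;
}

// Serialize one event: an "event:" line, a "data:" line, then a blank line.
function formatEvent({ event, data }: SseEvent): string {
  return `event: ${event}\ndata: ${data}\n\n`;
}

// Parse a chunk of complete frames back into events (single-line data only).
function parseEvents(chunk: string): SseEvent[] {
  return chunk
    .split("\n\n")
    .filter((frame) => frame.trim() !== "")
    .map((frame) => {
      let event = "message"; // default event name in the SSE format
      let data = "";
      for (const line of frame.split("\n")) {
        if (line.startsWith("event: ")) event = line.slice(7);
        else if (line.startsWith("data: ")) data = line.slice(6);
      }
      return { event, data };
    });
}

// The first frame tells the client where to POST; later frames carry JSON-RPC messages.
const stream =
  formatEvent({ event: "endpoint", data: "/messages?sessionId=abc123" }) +
  formatEvent({
    event: "message",
    data: JSON.stringify({ jsonrpc: "2.0", id: 1, result: { tools: [] } }),
  });

for (const { event, data } of parseEvents(stream)) {
  console.log(event, data);
}
```

This also explains why the `/messages` handler reads `sessionId` from the query string: the session ID is how a POST is matched to the open stream it belongs to.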

## MCP Client

Next, we will develop the MCP client, which connects to the MCP SSE server and interacts with the LLM. The client can be a command-line client or a web client.

We introduced the command-line client earlier. The only difference now is that it connects to the MCP SSE server over the SSE transport.

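```typescript
import { Client as McpClient } from "@modelcontextprotocol/sdk/client/index.js";
import { SSEClientTransport } from "@modelcontextprotocol/sdk/client/sse.js";

// Create MCP client
const mcpClient = new McpClient({
  name: "mcp-sse-demo",
  version: "1.0.0",
});

// Create SSE transport object
// (config.mcp.serverUrl comes from the application's own configuration)
const transport = new SSEClientTransport(new URL(config.mcp.serverUrl));

// Connect to MCP server
await mcpClient.connect(transport);
```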

The remaining steps are the same as in the command-line client: list all tools, then send the user's question together with the tool definitions to the LLM. If the LLM responds with tool calls, we invoke those tools and send the tool results, along with the message history, back to the LLM to obtain the final answer.
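This flow can be sketched as a small loop. To keep the example self-contained, the LLM and the MCP tool call are passed in as plain functions. `ToolCall`, `LlmReply`, `runToolLoop`, and the `maxRounds` cap are hypothetical names chosen for illustration, not SDK APIs.

```typescript
interface ToolCall {
  name: string;
  input: Record<string, unknown>;
}

// Simplified LLM reply: either final text or a list of tool calls to run.
type LlmReply = { text: string } | { toolCalls: ToolCall[] };

type Message = { role: "user" | "assistant" | "tool"; content: string };

async function runToolLoop(
  question: string,
  llm: (history: Message[]) => Promise<LlmReply>,
  callTool: (call: ToolCall) => Promise<string>,
  maxRounds = 5
): Promise<string> {
  const history: Message[] = [{ role: "user", content: question }];
  for (let round = 0; round < maxRounds; round++) {
    const reply = await llm(history);
    if ("text" in reply) return reply.text; // no tools requested: done
    // Record what the model asked for, then append each tool result.
    history.push({ role: "assistant", content: JSON.stringify(reply.toolCalls) });
    for (const call of reply.toolCalls) {
      const result = await callTool(call).catch((e: Error) => `Error: ${e.message}`);
      history.push({ role: "tool", content: result });
    }
  }
  throw new Error(`No final answer after ${maxRounds} rounds`);
}
```

The loop allows several rounds of tool use; a client that only needs one round of tool calls is the special case where the second LLM reply is always final text.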

The web client works in basically the same way, except that these steps are implemented behind API endpoints that the web page calls.

First, we initialize the MCP client, fetch all tools, convert them to the array format that Anthropic requires, and then create the Anthropic client.

```typescript
// Initialize MCP client
async function initMcpClient() {
  if (mcpClient) return;

  try {
    console.log("Connecting to MCP server...");
    mcpClient = new McpClient({
      name: "mcp-client",
      version: "1.0.0",
    });

    const transport = new SSEClientTransport(new URL(config.mcp.serverUrl));
    await mcpClient.connect(transport);

    const { tools } = await mcpClient.listTools();

    // Convert tool format to the array format required by Anthropic
    anthropicTools = tools.map((tool: any) => {
      return {
        name: tool.name,
        description: tool.description,
        input_schema: tool.inputSchema,
      };
    });

    // Create Anthropic client
    aiClient = createAnthropicClient(config);

    console.log("MCP client and tools initialized successfully");
  } catch (error) {
    console.error("Failed to initialize MCP client:", error);
    throw error;
  }
}
```

Next, we can develop API endpoints to suit our own needs. For example, a chat endpoint can accept the user's question, let the LLM decide which MCP tools to call, run those tools through the MCP client, and send the tool results together with the message history back to the LLM to obtain the final answer. The code is as follows:

```typescript
// API: Chat request
apiRouter.post("/chat", async (req, res) => {
  try {
    const { message, history = [] } = req.body;

    if (!message) {
      console.warn("Message in request is empty");
      return res.status(400).json({ error: "Message cannot be empty" });
    }

    // Build message history
    const messages = [...history, { role: "user", content: message }];

    // Call AI
    const response = await aiClient.messages.create({
      model: config.ai.defaultModel,
      messages,
      tools: anthropicTools,
      max_tokens: 1000,
    });

    // Handle tool invocation
    const hasToolUse = response.content.some(
      (item) => item.type === "tool_use"
    );

    if (hasToolUse) {
      // Handle all tool invocations
      const toolResults = [];

      for (const content of response.content) {
        if (content.type === "tool_use") {
          const name = content.name;
          const toolInput = content.input as
            | { [x: string]: unknown }
            | undefined;

          try {
            // Call MCP tool
            if (!mcpClient) {
              console.error("MCP client not initialized");
              throw new Error("MCP client not initialized");
            }
            console.log(`Starting to call MCP tool: ${name}`);
            const toolResult = await mcpClient.callTool({
              name,
              arguments: toolInput,
            });

            toolResults.push({
              name,
              result: toolResult,
            });
          } catch (error: any) {
            console.error(`Tool invocation failed: ${name}`, error);
            toolResults.push({
              name,
              error: error.message,
            });
          }
        }
      }

      // Send tool results back to AI for final reply
      const finalResponse = await aiClient.messages.create({
        model: config.ai.defaultModel,
        messages: [
          ...messages,
          {
            role: "user",
            content: JSON.stringify(toolResults),
          },
        ],
        max_tokens: 1000,
      });

      const textResponse = finalResponse.content
        .filter((c) => c.type === "text")
        .map((c) => c.text)
        .join("\n");

      res.json({
        response: textResponse,
        toolCalls: toolResults,
      });
    } else {
      // Directly return AI reply
      const textResponse = response.content
        .filter((c) => c.type === "text")
        .map((c) => c.text)
        .join("\n");

      res.json({
        response: textResponse,
        toolCalls: [],
      });
    }
  } catch (error: any) {
    console.error("Failed to process chat request:", error);
    res.status(500).json({ error: error.message });
  }
});
```

The core implementation here is also quite simple, and it is basically the same as the command-line client. The only difference is that we have now implemented these processing steps in some interfaces.
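The chat endpoint sends tool results back to the model as a JSON string inside a plain user message. Anthropic's Messages API also defines dedicated `tool_result` content blocks, each matched to a `tool_use` block by ID. Below is a minimal sketch of building that follow-up history, using local types that mirror the API's block shapes instead of importing the SDK. `buildFollowUp` and the shape of its `outputs` argument are hypothetical, chosen only for this example.

```typescript
interface ToolUseBlock {
  type: "tool_use";
  id: string;
  name: string;
  input: unknown;
}
interface TextBlock {
  type: "text";
  text: string;
}
type AssistantBlock = ToolUseBlock | TextBlock;

interface ToolResultBlock {
  type: "tool_result";
  tool_use_id: string;
  content: string;
  is_error?: boolean;
}

type ChatMessage =
  | { role: "user"; content: string | ToolResultBlock[] }
  | { role: "assistant"; content: string | AssistantBlock[] };

// Append the assistant turn (with its tool_use blocks) and one user turn that
// holds a tool_result for every tool_use, keyed by the tool_use id.
function buildFollowUp(
  history: ChatMessage[],
  assistantContent: AssistantBlock[],
  outputs: Map<string, { text: string; failed?: boolean }>
): ChatMessage[] {
  const results: ToolResultBlock[] = [];
  for (const block of assistantContent) {
    if (block.type !== "tool_use") continue;
    const output = outputs.get(block.id);
    results.push({
      type: "tool_result",
      tool_use_id: block.id,
      content: output?.text ?? "No result recorded",
      ...(output === undefined || output.failed ? { is_error: true } : {}),
    });
  }
  return [
    ...history,
    { role: "assistant", content: assistantContent },
    { role: "user", content: results },
  ];
}
```

The returned array would be passed as `messages` to the second `aiClient.messages.create` call, typically together with the same `tools` list so that the model can interpret the tool blocks.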

## Usage

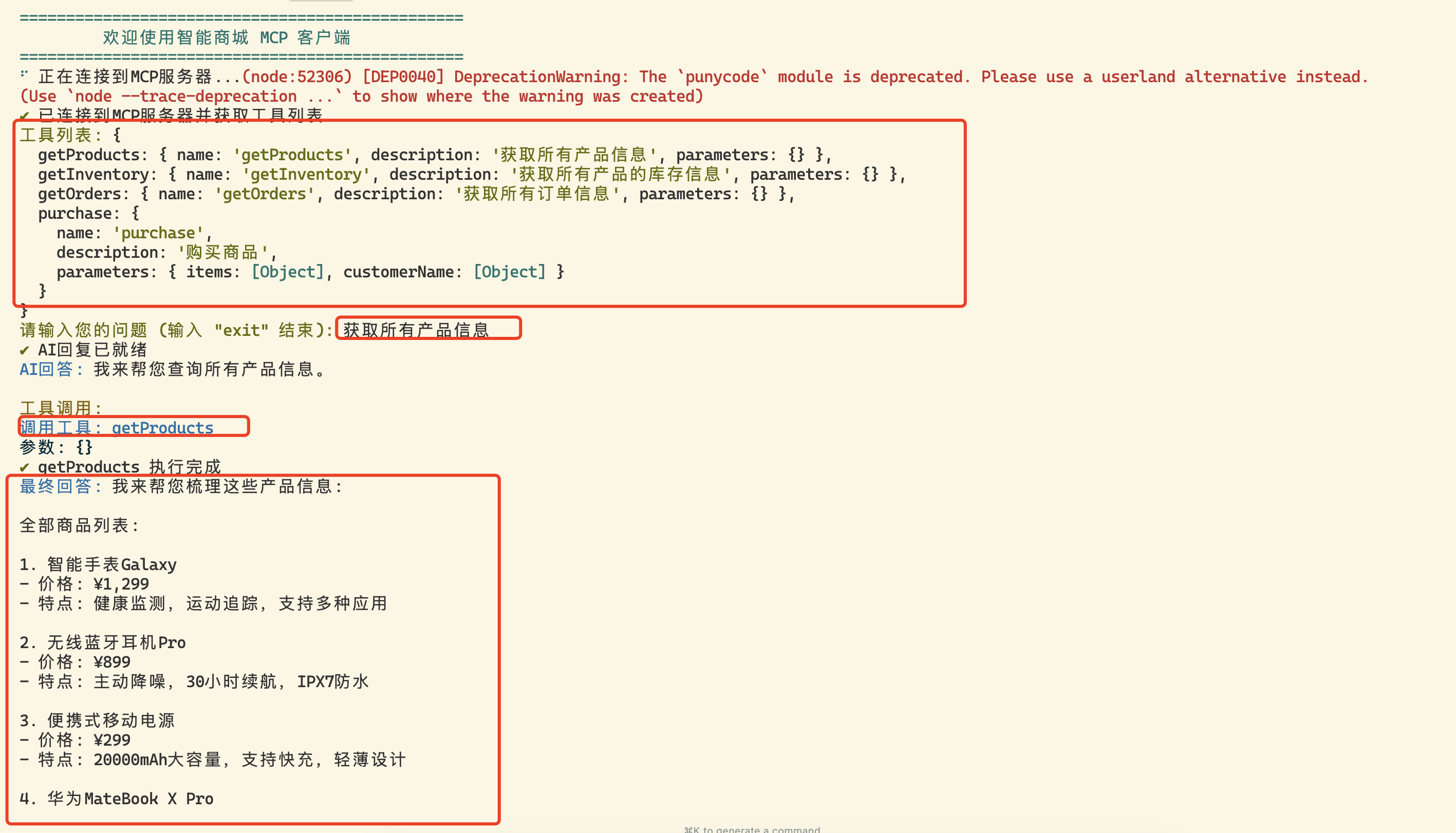

Here is an example of using the command-line client:

Of course, we can also use it in Cursor by creating a `.cursor/mcp.json` file and adding the following content:

```json
{
  "mcpServers": {
    "products-sse": {
      "url": "http://localhost:8083/sse"
    }
  }
}
```

Then we can see this MCP service in the settings page of Cursor, and we can use this MCP service in Cursor.

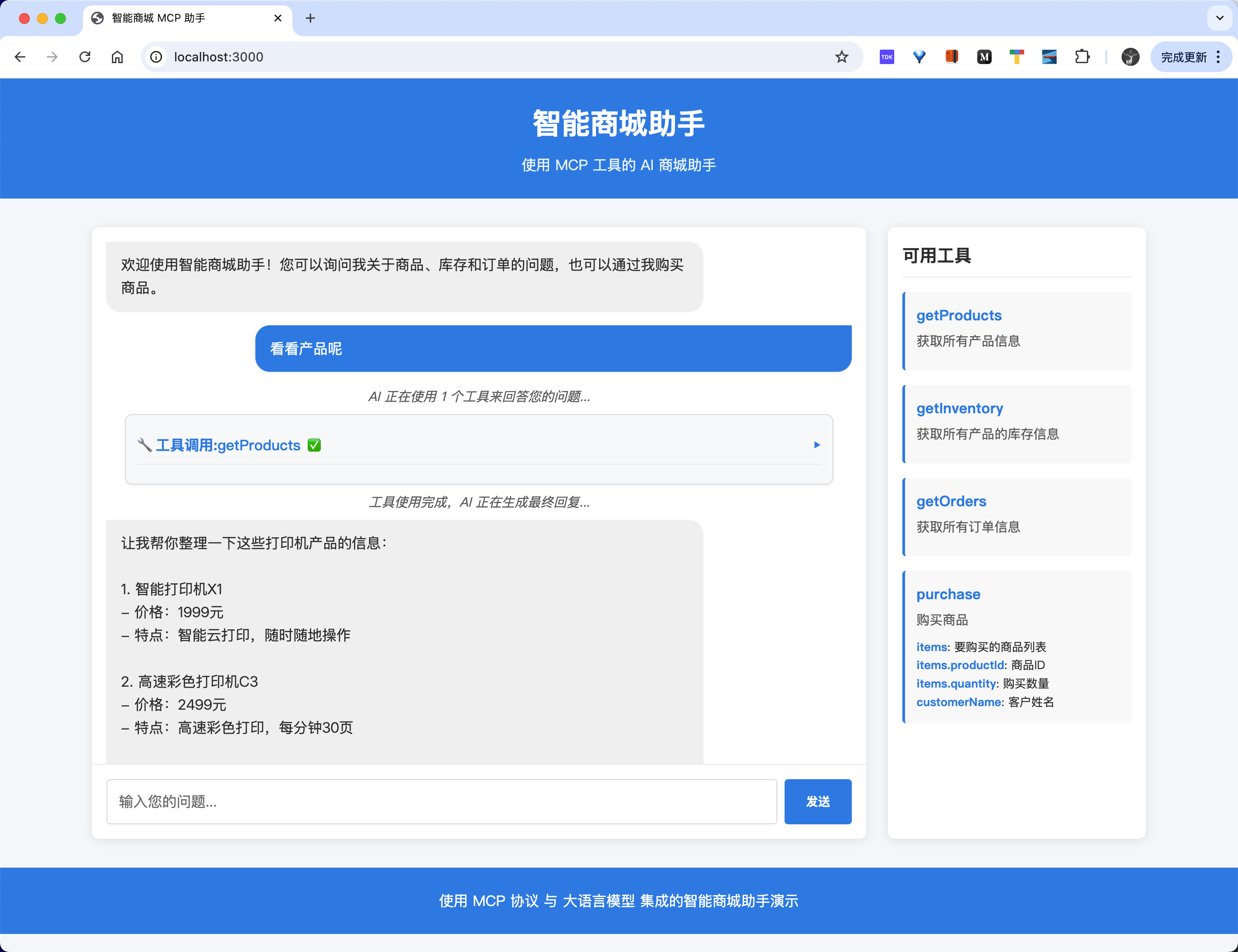

Here is an example of using the web client we developed:

## Debugging

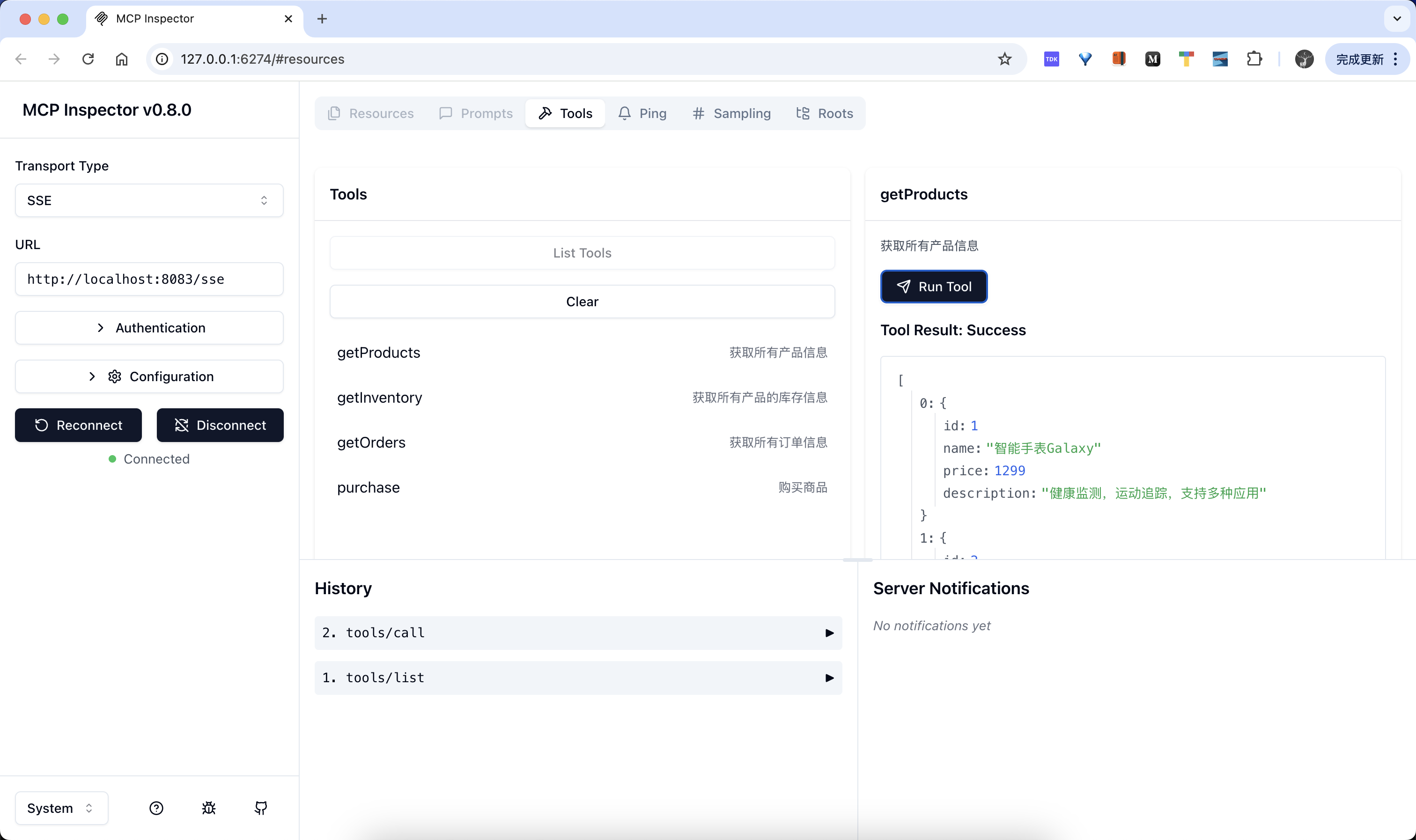

We can also use the `npx @modelcontextprotocol/inspector` command to debug our SSE service:

```bash
$ npx @modelcontextprotocol/inspector
Starting MCP inspector...
⚙️ Proxy server listening on port 6277
🔍 MCP Inspector is up and running at http://127.0.0.1:6274 🚀
```

Then open the above address in the browser, select SSE, and configure our SSE address to test:

## Summary

When the LLM decides to trigger a call to user tools, the quality of tool descriptions is crucial:

- **Precise Description**: Ensure that each tool's description is clear and includes keywords for the LLM to correctly identify when to use that tool.

- **Avoid Conflicts**: Do not provide multiple tools with similar functionalities, as this may lead to incorrect selections by the LLM.

- **Testing and Validation**: Test the accuracy of tool invocations using various user query scenarios before deployment.

MCP servers can be implemented using various technologies:

- Python SDK

- TypeScript/JavaScript

- Other programming languages

The choice should be based on the team's familiarity and existing technology stack.

Additionally, integrating AI assistants with MCP servers into existing microservice architectures has the following advantages:

1. **Real-time Data**: Provides real-time or near-real-time updates through SSE (Server-Sent Events), which is particularly important for dynamic data such as inventory information and order status.

2. **Scalability**: Different parts of the system can be scaled independently, for example, frequently used inventory check services can be scaled separately.

3. **Resilience**: The failure of a single microservice will not affect the operation of the entire system, ensuring system stability.

4. **Flexibility**: Different teams can handle different parts of the system independently, using different technology stacks if necessary.

5. **Efficient Communication**: SSE is more efficient than continuous polling, sending updates only when data changes.

6. **Enhanced User Experience**: Real-time updates and quick responses improve customer satisfaction.

7. **Simplified Client**: Client code is cleaner, without complex polling mechanisms, only needing to listen for server events.

Of course, if we want to use it in a production environment, we also need to consider the following points:

- Conduct comprehensive testing to identify potential errors.

- Design fault recovery mechanisms.

- Implement monitoring systems to track tool invocation performance and accuracy.

- Consider adding a caching layer to reduce the load on backend services.
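As one illustration of the caching point, here is a minimal time-based cache wrapper. It is a sketch under simple assumptions (a single process, no invalidation on writes). `withTtlCache` and the injectable `now` clock are hypothetical names, and a real deployment would also need to invalidate cached inventory after a purchase.

```typescript
// Wrap an async loader so repeated calls within ttlMs reuse the last result.
function withTtlCache<T>(
  load: () => Promise<T>,
  ttlMs: number,
  now: () => number = Date.now
): () => Promise<T> {
  let cached: { value: Promise<T>; expiresAt: number } | undefined;
  return () => {
    const time = now();
    if (cached && time < cached.expiresAt) return cached.value;
    const value = load();
    cached = { value, expiresAt: time + ttlMs };
    // Do not keep a failed load around: drop the entry so the next call retries.
    value.catch(() => {
      if (cached?.value === value) cached = undefined;
    });
    return value;
  };
}

// Example: cache a (stubbed) product lookup for five seconds.
let backendCalls = 0;
const getProductsCached = withTtlCache(async () => {
  backendCalls++;
  return [{ id: 1, name: "Smart Watch Galaxy" }];
}, 5000);

getProductsCached().then((products) => {
  console.log(`${products.length} product(s), ${backendCalls} backend call(s)`);
});
```

Caching the promise rather than the resolved value means that concurrent callers share one in-flight request instead of each hitting the backend.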

Through these practices, we can build an efficient and reliable MCP-based intelligent shopping mall service assistant, providing users with a real-time, personalized shopping experience.

---

Update (2025.05.28): using an OpenAI LLM in the client. Refer to [Developing MCP Server and Client with MCP Python SDK](https://www.claudemcp.com/zh/docs/mcp-py-sdk-basic).

Previously, we used the combination of TypeScript + Claude + MCP + SSE. Several issues asked how to replace Claude with one of OpenAI's models. Below, we implement a simple OpenAI-based MCP client using the MCP Python SDK.

The MCP Python SDK provides a high-level client interface for connecting to MCP servers in various ways, as shown in the following code:

```python
from mcp import ClientSession, StdioServerParameters, types
from mcp.client.stdio import stdio_client

# Create stdio type MCP server parameters
server_params = StdioServerParameters(
    command="python",  # Executable file
    args=["example_server.py"],  # Optional command line arguments
    env=None,  # Optional environment variables
)


async def run():
    async with stdio_client(server_params) as (read, write):  # Create a stdio type client
        async with ClientSession(read, write) as session:  # Create a client session
            # Initialize connection
            await session.initialize()

            # List available prompts
            prompts = await session.list_prompts()

            # Get a prompt
            prompt = await session.get_prompt(
                "example-prompt", arguments={"arg1": "value"}
            )

            # List available resources
            resources = await session.list_resources()

            # List available tools
            tools = await session.list_tools()

            # Read a resource
            content, mime_type = await session.read_resource("file://some/path")

            # Call a tool
            result = await session.call_tool("tool-name", arguments={"arg1": "value"})


if __name__ == "__main__":
    import asyncio

    asyncio.run(run())
```

In the above code, `stdio_client` starts the server process and gives us its read and write streams, and `ClientSession` wraps those streams in a client session. We then initialize the connection with `session.initialize()` and use the common client methods: `session.list_prompts()` and `session.get_prompt()` for prompts, `session.list_resources()` and `session.read_resource()` for resources, and `session.list_tools()` and `session.call_tool()` for tools.

However, real MCP clients and hosts usually pair the session with an LLM to make the interaction more intelligent. How would we implement an OpenAI-based MCP client? We can follow the approach Cursor takes:

- First, configure the MCP servers through a JSON configuration file.

- Read this configuration file to load the list of MCP servers.

- Get the list of available tools from each MCP server.

- Pass the user input and the tool list to the LLM (if the LLM does not support tool invocation, describe in the system prompt how to call these tools).

- Based on the LLM's response, invoke each requested tool on the MCP server that provides it.

- Send the tool results back to the LLM to get a response that better matches the user's intent.

This process is closer to how such clients are actually used. Below, we implement a simple MCP client based on OpenAI.

First, initialize a uv-managed project with the following command:

```bash
uv init mymcp --python 3.13
cd mymcp
```

Then install the MCP Python SDK dependencies:

```bash
uv add "mcp[cli]"
uv add openai
uv add rich
# The client code below imports load_dotenv from python-dotenv
uv add python-dotenv
```

The complete code is as follows:

```python

#!/usr/bin/env python

"""

MyMCP Client - Using OpenAI native tools invocation

"""

import asyncio

import json

import os

import sys

from typing import Dict, List, Any, Optional

from dataclasses import dataclass

from openai import AsyncOpenAI

from mcp import StdioServerParameters

from mcp.client.stdio import stdio_client

from mcp.client.session import ClientSession

from mcp.types import Tool, TextContent

from rich.console import Console

from rich.prompt import Prompt

from rich.panel import Panel

from rich.markdown import Markdown

from rich.table import Table

from rich.spinner import Spinner

from rich.live import Live

from dotenv import load_dotenv

# Load environment variables

load_dotenv()

# Initialize Rich console

console = Console()

@dataclass

class MCPServerConfig:

"""MCP Server Configuration"""

name: str

command: str

args: List[str]

description: str

env: Optional[Dict[str, str]] = None

class MyMCPClient:

"""MyMCP Client"""

def __init__(self, config_path: str = "mcp.json"):

self.config_path = config_path

self.servers: Dict[str, MCPServerConfig] = {}

self.all_tools: List[tuple[str, Any]] = [] # (server_name, tool)

self.openai_client = AsyncOpenAI(

api_key=os.getenv("OPENAI_API_KEY")

)

def load_config(self):

"""Load MCP server configuration from the config file"""

try:

with open(self.config_path, 'r', encoding='utf-8') as f:

config = json.load(f)

for name, server_config in config.get("mcpServers", {}).items():

env_dict = server_config.get("env", {})

self.servers[name] = MCPServerConfig(

name=name,

command=server_config["command"],

args=server_config.get("args", []),

description=server_config.get("description", ""),

env=env_dict if env_dict else None

)

console.print(f"[green]✓ Loaded {len(self.servers)} MCP server configurations[/green]")

except Exception as e:

console.print(f"[red]✗ Failed to load config file: {e}[/red]")

sys.exit(1)

async def get_tools_from_server(self, name: str, config: MCPServerConfig) -> List[Tool]:

"""Get tool list from a single server"""

try:

console.print(f"[blue]→ Connecting to server: {name}[/blue]")

# Prepare environment variables

env = os.environ.copy()

if config.env:

env.update(config.env)

# Create server parameters

server_params = StdioServerParameters(

command=config.command,

args=config.args,

env=env

)

# Use async with context manager (double nesting)

async with stdio_client(server_params) as (read, write):

async with ClientSession(read, write) as session:

await session.initialize()

# Get tool list

tools_result = await session.list_tools()

tools = tools_result.tools

console.print(f"[green]✓ {name}: {len(tools)} tools[/green]")

return tools

except Exception as e:

console.print(f"[red]✗ Failed to connect to server {name}: {e}[/red]")

console.print(f"[red] Error type: {type(e).__name__}[/red]")

import traceback

console.print(f"[red] Detailed error: {traceback.format_exc()}[/red]")

return []

async def load_all_tools(self):

"""Load tools from all servers"""

console.print("\n[blue]→ Fetching available tool list...[/blue]")

for name, config in self.servers.items():

tools = await self.get_tools_from_server(name, config)

for tool in tools:

self.all_tools.append((name, tool))

def display_tools(self):

"""Display all available tools"""

table = Table(title="Available MCP Tools", show_header=True)

table.add_column("Server", style="cyan")

table.add_column("Tool Name", style="green")

table.add_column("Description", style="white")

# Group by server

current_server = None

for server_name, tool in self.all_tools:

# Only display server name when it changes

display_server = server_name if server_name != current_server else ""

current_server = server_name

table.add_row(

display_server,

tool.name,

tool.description or "No description"

)

console.print(table)

def build_openai_tools(self) -> List[Dict[str, Any]]:

"""Build tool definitions in OpenAI tools format"""

openai_tools = []

for server_name, tool in self.all_tools:

# Build OpenAI function format

function_def = {

"type": "function",

"function": {

"name": f"{server_name}_{tool.name}", # Add server prefix to avoid conflicts

"description": f"[{server_name}] {tool.description or 'No description'}",

"parameters": tool.inputSchema or {"type": "object", "properties": {}}

}

}

openai_tools.append(function_def)

return openai_tools

def parse_tool_name(self, function_name: str) -> tuple[str, str]:

"""Parse tool name to extract server name and tool name"""

# Format: server_name_tool_name

parts = function_name.split('_', 1)

if len(parts) == 2:

return parts[0], parts[1]

else:

# If no underscore, assume it's the first server's tool

if self.all_tools:

return self.all_tools[0][0], function_name

return "unknown", function_name

async def call_tool(self, server_name: str, tool_name: str, arguments: Dict[str, Any]) -> Any:

"""Call the specified tool"""

config = self.servers.get(server_name)

if not config:

raise ValueError(f"Server {server_name} does not exist")

try:

# Prepare environment variables

env = os.environ.copy()

if config.env:

env.update(config.env)

# Create server parameters

server_params = StdioServerParameters(

command=config.command,

args=config.args,

env=env

)

# Use async with context manager (double nesting)

async with stdio_client(server_params) as (read, write):

async with ClientSession(read, write) as session:

await session.initialize()

# Call tool

result = await session.call_tool(tool_name, arguments)

return result

except Exception as e:

console.print(f"[red]✗ Failed to call tool {tool_name}: {e}[/red]")

raise

def extract_text_content(self, content_list: List[Any]) -> str:

"""Extract text content from MCP response"""

text_parts: List[str] = []

for content in content_list:

if isinstance(content, TextContent):

text_parts.append(content.text)

elif hasattr(content, 'text'):

text_parts.append(str(content.text))

else:

# Handle other types of content

text_parts.append(str(content))

return "\n".join(text_parts) if text_parts else "✅ Operation completed, but no text content returned"

    async def process_user_input(self, user_input: str) -> str:
        """Process user input and return final response"""
        # Build tool definitions
        openai_tools = self.build_openai_tools()

        try:
            # First call - let LLM decide if tools are needed
            messages = [
                {"role": "system", "content": "You are an intelligent assistant that can use various MCP tools to help users complete tasks. If no tools are needed, return the answer directly."},
                {"role": "user", "content": user_input}
            ]

            # Call OpenAI API
            kwargs = {
                "model": "deepseek-chat",
                "messages": messages,
                "temperature": 0.7
            }

            # Only add tools parameter if there are tools
            if openai_tools:
                kwargs["tools"] = openai_tools
                kwargs["tool_choice"] = "auto"

            # Use loading effect
            with Live(Spinner("dots", text="[blue]Thinking...[/blue]"), console=console, refresh_per_second=10):
                response = await self.openai_client.chat.completions.create(**kwargs)  # type: ignore
                message = response.choices[0].message

            # Check for tool invocation
            if hasattr(message, 'tool_calls') and message.tool_calls:  # type: ignore
                # Add assistant message to history
                messages.append({  # type: ignore
                    "role": "assistant",
                    "content": message.content,
                    "tool_calls": [
                        {
                            "id": tc.id,
                            "type": "function",
                            "function": {
                                "name": tc.function.name,
                                "arguments": tc.function.arguments
                            }
                        } for tc in message.tool_calls  # type: ignore
                    ]
                })

                # Execute each tool call
                for tool_call in message.tool_calls:
                    function_name = tool_call.function.name  # type: ignore
                    arguments = json.loads(tool_call.function.arguments)  # type: ignore

                    # Parse server name and tool name
                    server_name, tool_name = self.parse_tool_name(function_name)  # type: ignore

                    try:
                        # Use loading effect to call tool
                        with Live(Spinner("dots", text=f"[cyan]Calling {server_name}.{tool_name}...[/cyan]"), console=console, refresh_per_second=10):
                            result = await self.call_tool(server_name, tool_name, arguments)

                        # Extract text content from MCP response
                        result_content = self.extract_text_content(result.content)

                        # Add tool invocation result
                        messages.append({
                            "role": "tool",
                            "tool_call_id": tool_call.id,
                            "content": result_content
                        })
                        console.print(f"[green]✓ Successfully called {server_name}.{tool_name}[/green]")
                    except Exception as e:
                        # Add error message
                        messages.append({
                            "role": "tool",
                            "tool_call_id": tool_call.id,
                            "content": f"Error: {str(e)}"
                        })
                        console.print(f"[red]✗ Failed to call {server_name}.{tool_name}: {e}[/red]")

                # Get final response
                with Live(Spinner("dots", text="[blue]Generating final response...[/blue]"), console=console, refresh_per_second=10):
                    final_response = await self.openai_client.chat.completions.create(
                        model="deepseek-chat",
                        messages=messages,  # type: ignore
                        temperature=0.7
                    )
                final_content = final_response.choices[0].message.content
                return final_content or "Sorry, I cannot generate a final answer."
            else:
                # No tool invocation, return response directly
                return message.content or "Sorry, I cannot generate an answer."

        except Exception as e:
            console.print(f"[red]✗ Error processing request: {e}[/red]")
            return f"Sorry, an error occurred while processing your request: {str(e)}"

    async def interactive_loop(self):
        """Interactive loop"""
        console.print(Panel.fit(
            "[bold cyan]MyMCP Client has started[/bold cyan]\n"
            "Enter your questions, and I will use available MCP tools to assist you.\n"
            "Type 'tools' to see available tools\n"
            "Type 'exit' or 'quit' to exit.",
            title="Welcome to MyMCP Client"
        ))

        while True:
            try:
                # Get user input
                user_input = Prompt.ask("\n[bold green]You[/bold green]")

                if user_input.lower() in ['exit', 'quit', 'q']:
                    console.print("\n[yellow]Goodbye![/yellow]")
                    break

                if user_input.lower() == 'tools':
                    self.display_tools()
                    continue

                # Process user input
                response = await self.process_user_input(user_input)

                # Display response
                console.print("\n[bold blue]Assistant[/bold blue]:")
                console.print(Panel(Markdown(response), border_style="blue"))

            except KeyboardInterrupt:
                console.print("\n[yellow]Interrupted[/yellow]")
                break
            except Exception as e:
                console.print(f"\n[red]Error: {e}[/red]")

    async def run(self):
        """Run client"""
        # Load configuration
        self.load_config()
        if not self.servers:
            console.print("[red]✗ No configured servers[/red]")
            return

        # Load all tools
        await self.load_all_tools()
        if not self.all_tools:
            console.print("[red]✗ No available tools[/red]")
            return

        # Display available tools
        self.display_tools()

        # Enter interactive loop
        await self.interactive_loop()

async def main():
    """Main function"""
    # Check OpenAI API Key
    if not os.getenv("OPENAI_API_KEY"):
        console.print("[red]✗ Please set the environment variable OPENAI_API_KEY[/red]")
        console.print("Tip: Create a .env file and add: OPENAI_API_KEY=your-api-key")
        sys.exit(1)

    # Create and run client
    client = MyMCPClient()
    await client.run()


if __name__ == "__main__":
    try:
        asyncio.run(main())
    except KeyboardInterrupt:
        console.print("\n[yellow]Program exited[/yellow]")
    except Exception as e:
        console.print(f"\n[red]Program error: {e}[/red]")
        sys.exit(1)

```

The code above first loads the `mcp.json` file, whose format matches the one Cursor uses, to obtain all the configured MCP servers. For example, consider the following `mcp.json` file:

```json

{
  "mcpServers": {
    "weather": {
      "command": "uv",
      "args": ["--directory", ".", "run", "main.py"],
      "description": "Weather information server - Get current weather and forecasts",
      "env": {
        "OPENWEATHER_API_KEY": "xxxx"
      }
    },
    "filesystem": {
      "command": "npx",
      "args": ["-y", "@modelcontextprotocol/server-filesystem", "/tmp"],
      "description": "File system operation server - File reading and writing, directory management"
    }
  }
}

```
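As a rough illustration of how a file in this format could be turned into per-server settings, here is a minimal sketch. It is not the client's own `load_config`: the `ServerConfig` dataclass and the `parse_mcp_config` function are hypothetical names introduced only for this example, and the field defaults are assumptions.

```python
import json
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ServerConfig:
    """Hypothetical stand-in for the client's MCPServerConfig."""
    command: str
    args: List[str] = field(default_factory=list)
    description: str = ""
    env: Optional[Dict[str, str]] = None


def parse_mcp_config(raw: str) -> Dict[str, ServerConfig]:
    """Parse the text of an mcp.json file into one ServerConfig per server."""
    data = json.loads(raw)
    servers: Dict[str, ServerConfig] = {}
    # A missing "mcpServers" key is treated as "no servers configured".
    for name, entry in data.get("mcpServers", {}).items():
        servers[name] = ServerConfig(
            command=entry["command"],          # required for a stdio server
            args=entry.get("args", []),
            description=entry.get("description", ""),
            env=entry.get("env"),              # optional extra environment variables
        )
    return servers


example = """
{
  "mcpServers": {
    "weather": {"command": "uv", "args": ["run", "main.py"], "env": {"OPENWEATHER_API_KEY": "xxxx"}},
    "filesystem": {"command": "npx", "args": ["-y", "@modelcontextprotocol/server-filesystem", "/tmp"]}
  }
}
"""
servers = parse_mcp_config(example)
print(sorted(servers))  # ['filesystem', 'weather']
```

Each resulting entry carries the `command`, `args`, and `env` values that are later passed to `StdioServerParameters`.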

Then, in the `run` method, we call `load_all_tools` to load the tool list from every configured server. At its core, this requests the tool list from each MCP server, as shown below:

```python

async def get_tools_from_server(self, name: str, config: MCPServerConfig) -> List[Tool]:
    """Get tool list from a single server"""
    try:
        console.print(f"[blue]→ Connecting to server: {name}[/blue]")

        # Prepare environment variables
        env = os.environ.copy()
        if config.env:
            env.update(config.env)

        # Create server parameters
        server_params = StdioServerParameters(
            command=config.command,
            args=config.args,
            env=env
        )

        # Use async with context manager (double nesting)
        async with stdio_client(server_params) as (read, write):
            async with ClientSession(read, write) as session:
                await session.initialize()

                # Get tool list
                tools_result = await session.list_tools()
                tools = tools_result.tools
                console.print(f"[green]✓ {name}: {len(tools)} tools[/green]")
                return tools

    except Exception as e:
        console.print(f"[red]✗ Failed to connect to server {name}: {e}[/red]")
        console.print(f"[red]  Error type: {type(e).__name__}[/red]")
        import traceback
        console.print(f"[red]  Detailed error: {traceback.format_exc()}[/red]")
        return []

```

The key point is that we use the client interface provided by the MCP Python SDK directly to connect to the MCP server and fetch its tool list.

Next, we handle user input. First, we convert the MCP tool list into the function tools format that the OpenAI API recognizes, then send the user input together with the tools to the model. Based on the model's response, we determine whether a tool needs to be called. If so, we call the MCP tool directly, append the results to the conversation, and send it back to the model to get a more complete answer. The whole process is not complicated, though many details can still be optimized to suit your own integration needs.
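The conversion step can be sketched as follows. This is only an illustration, not the client's actual `build_openai_tools` or `parse_tool_name`: the `ToolInfo` stand-in (mirroring the `name`, `description`, and `inputSchema` fields of an MCP tool), the `__` separator used to combine server and tool names, and the shape of the `all_tools` mapping are all assumptions made for this example.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Tuple

SEPARATOR = "__"  # assumption: any separator that is valid in a function name would do


@dataclass
class ToolInfo:
    """Stand-in exposing the fields this sketch reads from an MCP tool."""
    name: str
    description: str = ""
    inputSchema: Dict[str, Any] = field(default_factory=dict)


def to_openai_tools(all_tools: Dict[str, List[ToolInfo]]) -> List[Dict[str, Any]]:
    """Convert tools grouped by server name into OpenAI function-tool definitions."""
    openai_tools: List[Dict[str, Any]] = []
    for server_name, tools in all_tools.items():
        for tool in tools:
            openai_tools.append({
                "type": "function",
                "function": {
                    # Prefix with the server name so the call can be routed back later.
                    "name": f"{server_name}{SEPARATOR}{tool.name}",
                    "description": tool.description or "",
                    # The MCP input schema is already JSON Schema; fall back to an empty object.
                    "parameters": tool.inputSchema or {"type": "object", "properties": {}},
                },
            })
    return openai_tools


def split_tool_name(function_name: str) -> Tuple[str, str]:
    """Recover (server_name, tool_name) from a combined function name."""
    server_name, _, tool_name = function_name.partition(SEPARATOR)
    return server_name, tool_name


weather_tool = ToolInfo(
    name="get_current_weather",
    description="Get current weather information for a specified city",
    inputSchema={"type": "object", "properties": {"city": {"type": "string"}}, "required": ["city"]},
)
tools = to_openai_tools({"weather": [weather_tool]})
print(tools[0]["function"]["name"])          # weather__get_current_weather
print(split_tool_name(tools[0]["function"]["name"]))  # ('weather', 'get_current_weather')
```

Because the split happens at the first separator, this scheme assumes server names do not themselves contain `__`.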

Now we can test the client:

```bash

$ python simple_client.py
✓ Loaded 1 MCP server configuration
→ Fetching available tool list...
→ Connecting to server: weather
[05/25/25 11:42:51] INFO Processing request of type ListToolsRequest server.py:551
✓ weather: 2 tools
                                   Available MCP Tools
┏━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━┳━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┓
┃ Server  ┃ Tool Name            ┃ Description                                          ┃
┡━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━╇━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━┩
│ weather │ get_current_weather  │                                                      │
│         │                      │ Get current weather information for a specified city │
│         │                      │                                                      │
│         │                      │ Args:                                                │
│         │                      │     city: City name (in English)                     │
│         │                      │                                                      │
│         │                      │ Returns:                                             │
│         │                      │     Formatted current weather information            │
│         │                      │                                                      │
│         │ get_weather_forecast │                                                      │
│         │                      │ Get weather forecast for a specified city            │
│         │                      │                                                      │
│         │                      │ Args:                                                │
│         │                      │     city: City name (in English)                     │
│         │                      │     days: Forecast days (1-5 days, default 5 days)   │
│         │                      │                                                      │
│         │                      │ Returns:                                             │
│         │                      │     Formatted weather forecast information           │
│         │                      │                                                      │
└─────────┴──────────────────────┴──────────────────────────────────────────────────────┘
╭──────────────────────── Welcome to MyMCP Client ────────────────────────╮
│ MyMCP Client has started                                                │
│ Enter your questions, and I will use available MCP tools to assist you. │
│ Type 'tools' to see available tools                                     │
│ Type 'exit' or 'quit' to exit.                                          │
╰─────────────────────────────────────────────────────────────────────────╯

You: Hello, who are you?
⠹ Thinking...

A:
╭──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮
│ Hello! I am an intelligent assistant that can help you with various tasks, such as answering questions, checking the weather, providing suggestions, etc. If you have any needs, just let me know! 😊 │
╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

You: How's the weather in Chengdu today? Is it suitable to wear a skirt tomorrow?
⠧ Thinking...
⠴ Calling weather.get_current_weather...[05/25/25 11:44:03] INFO Processing request of type CallToolRequest server.py:551
⠴ Calling weather.get_current_weather...
✓ weather.get_current_weather call successful
⠸ Calling weather.get_weather_forecast...[05/25/25 11:44:04] INFO Processing request of type CallToolRequest server.py:551
⠋ Calling weather.get_weather_forecast...
✓ weather.get_weather_forecast call successful
⠧ Generating final response...

A:
╭──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╮
│ Today's weather in Chengdu is sunny, with a current temperature of 26.9°C, humidity at 44%, and light winds, making it very suitable for outdoor activities. │
│ │
│ Tomorrow's (May 25) weather forecast: │
│ │
│ • Weather: Cloudy │
│ • Temperature: 26.4°C~29.3°C │
│ • Wind: 3.1 m/s │
│ • Humidity: 41% │
│ │
│ Suggestion: Tomorrow's temperature is moderate, and the wind is not strong, so wearing a skirt is perfectly fine. However, it is recommended to pair it with a light jacket or sun protection clothing, as the UV rays may be strong on cloudy days. If planning to stay outdoors for a long time, consider bringing an umbrella as a backup. │
╰──────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────────╯

You:

```

The output shows that the client successfully calls the tools provided by the configured MCP server.
